Almost Timely News: 🗞️ Setting the Record Straight on AI Optimization (2025-06-22)

Lies, damn lies, and snake oil

The Big Plug

👉 Download the new, free AI-Ready Marketing Strategy Kit!

Content Authenticity Statement

95% of this week's newsletter was generated by me, the human. You will see bountiful AI outputs in the video, and you will see an output from Anthropic Claude in the text. Learn why this kind of disclosure is a good idea and might be required for anyone doing business in any capacity with the EU in the near future.

Watch This Newsletter On YouTube 📺

Click here for the video 📺 version of this newsletter on YouTube »

Click here for an MP3 audio 🎧 only version »

What's On My Mind: Setting the Record Straight on AI Optimization

Okay, let's clear the air on the twisted, contorted, crazy space of AI optimization. There are so many weird, confusing names for all of this that it sounds like either a child's nursery rhyme or IKEA furniture names - GAIO, GEO, AIO, AEO, CSEO (conversational search/SEO), etc. We need to lay down some basics so it's clear what's real and what's not, something you can take to your stakeholders when they ask - and a way to cut through a lot of the snake oil.

Part 1: Definitions

First, let's be clear what we're talking about. Fundamentally, what everyone wants to know is this: Can we tell how much traffic (and therefore prospects, leads, opportunities, sales, and ultimately revenue) generative AI in all its incarnations is sending us, directly or indirectly?

From that blanket statement, we decompose into three major areas.

And from there, we ask the logical question: how can we get these different systems to recommend us more? When we talk about whatever the heck we're calling this - for the rest of this newsletter I'm sticking with AI optimization - we're really talking about the three whats.

You'll note something really important. The three major areas all tend to get lumped together: AI models, AI-enabled search, AI replacements for search. They should not be. They are not the same. This will become apparent in part 3.

Part 2: What You Cannot Know

This is a fundamental principle: AI is not search. Let's repeat that for the folks in the back who weren't paying attention. AI IS NOT SEARCH.

When was the last time you fired up ChatGPT (or the AI tool of your choice) and typed in something barely coherent like "best marketing firm boston"? Probably never. That's how we Googled for things in the past. That's not how most people use AI. Hell, a fair number of people have almost-human relationships with their chat tools of choice, giving them pet names, talking to them as if they were real people.

What this means is that it's nearly impossible to predict with any meaningful accuracy what someone's likely to type in a chat with an AI model. Let's look at an example. Using OpenAI's Platform - which allows you direct, nearly uncensored access to the models that power tools like ChatGPT - let's ask about PR firms in Boston. I asked it this prompt:

"Let's talk about PR firms in Boston. My company needs a new PR firm to grow our share of mind. We're an AI consulting firm. What PR firms in Boston would be a good fit for us?"

o4-mini

GPT-4.1

GPT-4o

You can see just within OpenAI's own family of models, via the API, I get wildly different results. The most powerful reasoning model available by API, the thinking model, comes up with very, very different results - but even GPT-4o and GPT-4.1 come up with different results.

This is what the models themselves know. When you use any tool that connects to OpenAI's APIs, you are using this version of their AI (as opposed to the ChatGPT web interface, which we'll talk about in a bit).

Now, suppose I change just a couple of words in the prompt, something reasonable but semantically identical. What if I chop off the first sentence, for a more direct prompt:

"My company needs a new PR firm to grow our share of mind. We're an AI consulting firm. What PR firms in Boston would be a good fit for us?"

What do we get?

o4-mini

GPT-4.1

GPT-4o
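A rough way to put a number on how far apart two such lists are is set overlap. The sketch below is illustrative only: the firm names are placeholders rather than real model output, and `normalize` and `jaccard` are hypothetical helper names, not part of any vendor's API.

```python
def normalize(name: str) -> str:
    """Lowercase and collapse whitespace so 'Firm  B' and 'firm b' match."""
    return " ".join(name.lower().split())


def jaccard(a: list[str], b: list[str]) -> float:
    """Share of firms named by both lists, out of all firms named by either."""
    set_a = {normalize(x) for x in a}
    set_b = {normalize(x) for x in b}
    union = set_a | set_b
    if not union:
        return 1.0  # two empty lists are trivially identical
    return len(set_a & set_b) / len(union)


# Placeholder firm names (assumption), standing in for two model responses.
run_one = ["Firm A", "Firm B", "Firm C", "Firm D"]
run_two = ["firm b", "Firm D", "Firm E", "Firm F"]
overlap = jaccard(run_one, run_two)
print(f"overlap: {overlap:.2f}")
```

A score near 1.0 would mean two runs named mostly the same firms; a score near 0 means they barely agree.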

Surprise! Same model family, same vendor, wildly different results. This is why it’s generally a fool’s errand to try to guess what any given AI model will return as its results. Just a few words’ difference can lead to very, very different results - and this is for a very naive conversational query.

What would happen if you were to use the conversational tone most people use? What if, instead of a brusque, search-like query, you asked in a way that reflected your own personality?

"Hey Chatty! Good morning. Hey listen, my company needs a new PR firm to grow our share of mind. We’re an AI consulting firm. And we’ve tried PR firms in the past. Boy, let me tell you, some of the firms we’ve tried have been real stinkers. Half of them charge an arm and a leg for work that you could do, and the other half are firms filled with navel-gazing thought leaders who don’t produce any results. We’re in the Boston area (go Sox!) and I wonder who you’d recommend for us. Got a list of PR firms that are actually worthwhile?"

Good luck attempting to model the infinite number of ways people could ask AI.

So let’s set this down as a fundamental principle of AI optimization: you cannot know what people are asking AI. Anyone who says you can know this is lying. There’s no polite way to say that. They’re lying - and if they’re asking for your money in exchange for supposed data about what people are asking ChatGPT and similar services, then they’re pretty much taking your money and giving you raw sewage in return.

Part 3: What Can You Know?

Now that we understand that AI optimization isn’t one thing, but three separate things, we can start to pick apart what we can know.

AI Models

Can we know what AI models know about us? Yes and no. AI models - the core engines that power the AI tools we all know and use - are basically gigantic statistical databases. There are over 1.8 million different AI models, and dozens of foundation models, state of the art models that we use all the time.
Here is just a sampling of the models:

What's the point of this catalog? Depending on where you are in the world, and what software and vendors you use, there’s a good chance one of these models is helping answer questions. For example, let’s say you’re using Microsoft Copilot at work. (I’m sorry.) You’re not just using one AI model - you’re using, behind the scenes, several. Microsoft Copilot, to contain costs, invisibly routes your query to the model Microsoft thinks can accomplish your task at the lowest cost possible, so it might route it to Phi 4 (its own in-house model) or one of OpenAI’s models, if Phi 4 isn’t up to the task. It’s a good idea to generally know what models power what systems. We know, for example, that OpenAI’s models power ChatGPT. That’s pretty much a given. Google’s Gemini powers… well, it seems like all of Google these days. They’re cramming Gemini into any place they can. Meta’s Llama powers all the AI within Meta’s apps, like Instagram, Facebook, Threads, and WhatsApp, so if you’re a social media marketer in the Meta ecosystem, knowing what Llama knows is helpful. And tons of companies behind the scenes are running local versions of DeepSeek because it’s a state of the art model you can run on your own hardware. If your company suddenly has a private-label AI hub that performs well, and it didn’t a few months ago, that’s probably why. To know what these models know about us, we'd have to go model by model and ask them in as many ways as we could what they know. This is, computationally, a nightmare. Why? The cost to ask each of these models a million different questions about the million different ways you think about a topic would be astronomical. Here’s why knowing the models does matter, and something you can know at relatively low cost. In order for AI to answer any questions, it has to have some level of knowledge about you. 
One thing we’d want to know is the latest knowledge it has about us, because providing training data to AI models (aka large amounts of text on the Internet) is a key strategy for influencing them.

BUT, and here’s the big BUT, many AI model makers have been using more and more synthetic data (AI-generated) to train new versions of their models. Combine this with the very, very long time it takes to make models, and AI models often seem frozen in time, badly out of date.

Here’s how we can know that. Go to the developer portal for any AI model - not the consumer web interface. For example, I would go to Google AI Studio or OpenAI Platform Playground or Anthropic Console. These are the developer interfaces where you can talk to the model directly with no add-ons at all. Ask them a straightforward question like this:

“What is the most recent issue of the Almost Timely newsletter at christopherspenn.com that you know about? Provide the exact title, date of publication in YYYY-MM-DD format, and the URL in this format:

Let’s see what the answers are from the newest models:
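Once answers like these come back, the date each model reports can be pulled out and compared against today. This is a minimal sketch, assuming the model followed the YYYY-MM-DD instruction; `latest_date` and `months_stale` are hypothetical helper names, and the sample answer is invented for illustration rather than copied from any model.

```python
import re
from datetime import date
from typing import Optional

DATE_PATTERN = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")


def latest_date(answer: str) -> Optional[date]:
    """Return the most recent valid YYYY-MM-DD date found in an answer."""
    found = []
    for year, month, day in DATE_PATTERN.findall(answer):
        try:
            found.append(date(int(year), int(month), int(day)))
        except ValueError:
            continue  # skip impossible dates such as 2024-13-45
    return max(found) if found else None


def months_stale(answer: str, today: date) -> Optional[int]:
    """Whole calendar months between the answer's latest date and today."""
    newest = latest_date(answer)
    if newest is None:
        return None  # the model gave no usable date
    return (today.year - newest.year) * 12 + (today.month - newest.month)


# Invented answer text (assumption), not real model output.
sample = "Title: Example Issue. Date: 2024-06-16. URL: https://example.com/issue"
gap = months_stale(sample, today=date(2025, 6, 22))
print(gap)
```

Running the same check for each model gives a comparable staleness figure, with `None` flagging models that reported no date at all.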

We can see from this experiment that most models' knowledge of my newsletter ends a year ago. This is normal and expected; the process of training a new model takes 6-9 months, and then 3-6 months of safety testing and QA.

So that's what the models themselves know. It's valuable to understand this because if the base, underlying model has no knowledge of us, everything else is going to be in trouble. This is a key point - AI tools that we'll discuss in a minute use other forms of verification like search to validate what they're saying, but if the underlying models have no clue you exist, they won't even know you should be in the results.

AI-Enabled Search

The second category of AI optimization we care about is AI-enabled search. This is where traditional search engines put AI assistance within the search results. Google's AI Overviews do this, as do DuckAssist from DuckDuckGo, and of course Copilot in Bing. AI Overviews summarize the search results, synthesizing an answer.

One of the big questions everyone has about Google's AI Overviews is how they work. As with so many things, Google has documented how the system works; the challenge for us is that they've done so in dozens of different places, such as research papers, conference submissions, patents, and of course, marketing material.

Here's how the system works; I made this summary with Anthropic Claude Sonnet 4 from my massive NotebookLM collection of 30+ patents and research papers from Google.

Google AI Overviews: User Journey Step-by-Step

Phase 1: User Input & Query Analysis

1. 👤 User Input

2. 🔍 Query Analysis

3. 🤔 Complexity Decision Point

IF query is complex or multi-faceted:

IF query is straightforward:

Phase 2: Information Retrieval

4a. 📊 Query Fan-Out (Complex Queries)

4b. 🎯 Direct Search (Simple Queries)

5. 🌐 Comprehensive Information Retrieval

Phase 3: AI Processing & Draft Creation

6. 🤖 AI Processing Initiation

7. 🔀 Drafting Strategy Decision

IF using SPECULATIVE RAG approach:

IF using standard approach:

8a. ⚡ Parallel Draft Generation

8b. 📝 Single Draft Generation

9. ✍️ Abstractive Summarization

Phase 4: Quality Assurance & Verification

10. ✅ Factual Verification

11. 🛡️ Safety & Bias Filtering

12. 📊 Confidence Assessment

13. 🚦 First Confidence Gate

IF confidence is LOW:

IF confidence is HIGH:

Phase 5: Output Preparation

14. 🔗 Source Attribution

15. 🎯 Final Quality Gate

16. 🚥 Display Decision Point

IF AI Overview meets quality standards:

IF quality concerns remain:

Phase 6: User Output & Display

17. 🤖 AI Overview Displayed

18. 📋 Traditional Search Results (Fallback)
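The branching in the journey above can be traced in a few lines of Python. This is only a sketch of the summary's decision points, not Google's implementation: the function name and step labels are invented here, and routing a low-confidence draft to the traditional results is an assumption, since the summary only names that listing as the fallback.

```python
def overview_path(is_complex: bool, speculative_rag: bool,
                  confidence_high: bool, meets_quality: bool) -> list[str]:
    """Trace which steps a query passes through in the summarized flow."""
    steps = ["user input", "query analysis"]
    # Step 3: complex queries fan out, simple ones search directly.
    steps.append("query fan-out" if is_complex else "direct search")
    steps += ["information retrieval", "AI processing"]
    # Step 7: speculative RAG drafts in parallel, otherwise one draft.
    steps.append("parallel drafts" if speculative_rag else "single draft")
    steps += ["abstractive summarization", "factual verification",
              "safety and bias filtering", "confidence assessment"]
    if not confidence_high:
        # ASSUMPTION: a low-confidence draft exits to the fallback listing.
        return steps + ["traditional search results"]
    steps += ["source attribution", "final quality gate"]
    # Step 16: show the overview only if it clears the quality gate.
    steps.append("AI Overview displayed" if meets_quality
                 else "traditional search results")
    return steps


path = overview_path(is_complex=True, speculative_rag=False,
                     confidence_high=True, meets_quality=True)
print(" -> ".join(path))
```

Whichever branch a query takes, the retrieval step sits in the middle of the path, which is the point the discussion that follows leans on.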

I've got a long, long list of the sources I used, with NotebookLM, to assemble this information, which you can find at the very, very end of the newsletter. Props to Mike King over at iPullRank for the inspiration and the reminder that Google Patents is a rich source of information (and one preferred by the late, great Bill Slawski of SEO by the Sea) alongside academic research by Google.

So what? What are we supposed to learn from this massive, tangled mess? What can we know?

Phase 2 is the most critical part of the process. It's the heart of where Google is getting its information for AI Overviews. And it's powered by... Google Search. Which in turn means if you want to do well with AI Overviews... do well with Google Search.

That's probably not the super high tech, super mysterious AI takeaway you were hoping for, but it's the truth. So for all those folks who are saying, "Fire your SEO agency! Everything is different now!" No, no it isn't. And this is not a terrible surprise. For a system like AI Overviews to work well AND quickly, Google has to leverage the data it already has and has spent decades tuning.

Additionally, this is an area where we can get solid data, because there are companies like Semrush and Ahrefs that are running thousands of cheap simulations with known search queries (like "best PR firm boston") to see what triggers AI Overviews and what the results are - and so can you. You could literally just run through your entire SEO keyword list and see what comes up (or better yet, automate the process).

Despite appearing like a conversation with generative AI, Google AI Mode shares much more in common with AI Overviews (such as query fan-out) than talking straight to Gemini. That's why it's in category 2, AI-assisted search, rather than AI replacements for search. Google has also said we'll get some level of AI Mode data in our Google Search Console feeds.
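The keyword-list run described above might be tallied like this. It is a sketch, not a working scraper: `check` is a hypothetical callable standing in for whatever SERP tool or export you use, and the `Result` fields and sample data are assumptions made up for illustration.

```python
from typing import Callable, Iterable, NamedTuple


class Result(NamedTuple):
    has_overview: bool        # did the results page show an AI Overview?
    cited_domains: list[str]  # domains linked as sources in that overview


def tally(keywords: Iterable[str], check: Callable[[str], Result],
          our_domain: str) -> dict[str, float]:
    """Summarize how often overviews appear and how often they cite us."""
    total = with_overview = citing_us = 0
    for keyword in keywords:
        result = check(keyword)
        total += 1
        if result.has_overview:
            with_overview += 1
            if our_domain in result.cited_domains:
                citing_us += 1
    return {
        "keywords": total,
        "overview_rate": with_overview / total if total else 0.0,
        "cited_rate": citing_us / with_overview if with_overview else 0.0,
    }


# Stand-in data (assumption) so the sketch runs offline.
FAKE = {
    "best pr firm boston": Result(True, ["example.com", "other.example"]),
    "pr agency pricing": Result(True, ["other.example"]),
    "pr firm near me": Result(False, []),
}
summary = tally(FAKE, FAKE.__getitem__, our_domain="example.com")
print(summary)
```

Passing the lookup in as a function keeps the tallying logic separate from the data source, so the same summary works whether results come from a vendor export or a manual spot check.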
AI Replacements for Search

This brings us to the third bucket of AI optimization, which is AI replacements for search. These are the consumer-facing apps that we all know and enjoy, from ChatGPT to Gemini to Claude, in mobile app form or website.

You might say, okay, how is this different than the AI models? The models are the engines of generative AI. A web interface or a mobile app like the ChatGPT app contains the same engine, but there's a lot of extra stuff around it. OpenAI built the rest of the car, just like the Claude app has a lot of stuff around it.

This is necessary because AI models are useless without an interface for the average person. AI interface makers like OpenAI have added a ton of features to make their apps user-friendly, accessible, and simple. Features like web search and search grounding.

What does this mean? When a generative AI tool like ChatGPT has low confidence in its response, it can use its own built-in web search to validate that it's not making things up.

Here's a simple example. Suppose I ask the GPT-4.1 model this question:

"What good news has Quarterhorse PR CEO Nicole Bestard had to say recently?"

The response it gives is rooted in the knowledge it was trained on, which is to say not much:

Recently, Quarterhorse PR CEO Nicole Bestard shared good news regarding her company’s growth and progress. Most notably, she celebrated Quarterhorse PR’s 1-year anniversary, acknowledging both the company’s successful journey over the past year and the support from clients and partners. Nicole Bestard expressed excitement for the future and gratitude for the relationships built, marking this milestone as a testament to her team’s hard work and the trust their clients have placed in Quarterhorse PR.

Given that Quarterhorse was founded in 2020, it's safe to say GPT-4.1 doesn't know much on the topic.
Ask the same question in ChatGPT using the GPT-4.1 model, and you get a very different, more accurate story:

Nicole Bestard, CEO & Principal of Quarter Horse PR, has shared some uplifting and inspiring “good news” recently - here are a few standouts: 🌞 Solar Farm + Sheep Story

That is driven by its internal search.

Now, revisiting part 2, there is absolutely, positively no way to know what people are typing into tools like ChatGPT. A company like OpenAI is NEVER going to share that information willingly because of both privacy and profit reasons. Privacy, to give users the illusion that what they type into AI tools is private, and profit, because user-submitted information is the crown jewel of any AI company. User-submitted information is how AI companies calibrate and build better models.

But we can know quite a lot. First, we can know what sources these tools draw from - and they all draw from different sources. That same query across three different apps gives a variety of different sources. Gemini only pulls from the QHPR website and PR Daily. Claude hit up LinkedIn, Twitter, PR Daily, PR Week, and a few dozen other sites. ChatGPT hit up LinkedIn, the QHPR website, YouTube, BusinessWire, and a few dozen other sites.

That tells us a great deal - namely, where we should be placing information so that it's findable by these tools.

The second major thing we can know is when a human clicks on a link from a generative AI tool, we can see the referral traffic on our websites. We have no idea what they typed to spur the conversation, but we can see the human clicked on something AND visited our website, which also means we can know what page they landed on, what pages they visited, and whether they took any high-value actions.

I have an entire guide over on the Trust Insights website - free, no information to give, nothing to fill out - on how to set up a Google Analytics report to determine this.
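If you export referrer URLs from your analytics, the same idea can be roughed out in code. This is a minimal sketch, assuming a plain list of referrer strings; the hostname map is an illustrative, incomplete assumption that will drift over time, and `ai_source` and `count_ai_visits` are hypothetical names, not part of Google Analytics.

```python
from collections import Counter
from typing import Iterable, Optional
from urllib.parse import urlparse

# ASSUMPTION: an illustrative, incomplete map of AI tool hostnames.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
    "copilot.microsoft.com": "Copilot",
}


def ai_source(referrer: str) -> Optional[str]:
    """Name the AI tool behind a referrer URL, or None if it is not one."""
    host = (urlparse(referrer).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRERS.get(host)


def count_ai_visits(referrers: Iterable[str]) -> Counter:
    """Count visits per AI tool, ignoring every other referrer."""
    return Counter(tool for tool in map(ai_source, referrers) if tool)


# Made-up referrer strings for illustration.
visits = [
    "https://chatgpt.com/",
    "https://www.google.com/search?q=pr+firms",
    "https://claude.ai/chat/abc",
    "https://chat.openai.com/",
    "",
]
counts = count_ai_visits(visits)
print(counts)
```

Matching on the exact hostname rather than a substring keeps ordinary search traffic, such as www.google.com, out of the Gemini bucket.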
Part 4: What Can You Optimize?

Okay, now that we've been through an exhaustive tour of the three categories and their systems, we can talk about what we have control over, what we can optimize. If you missed it, I have an entire 8-page guide about how to optimize for AI, free of cost (there is a form to fill out) here. I'm not going to reprint the entire thing here.

Here's the very short version.

For category 1, AI models themselves, they need training data. Lots of training data - and AI model makers ingest training data very infrequently. The test we did for category 1, measuring when a model last had an update about us, is a good proxy for how often those updates happen. It's about once a year, give or take.

That means you have to have a LOT of content, all over the web, in as many places as possible, in the hopes that the next time a model maker scoops up new training data, your piles of new data will be in it. In terms of how to do that, it's all about generating content, so be in as many places as you can be. I've said in the past, one of my blanket policies is to say yes to any podcast that wants to interview me (and that's still my policy) as long as the transcripts and materials are being posted in public - especially on YouTube.

For category 2, AI-assisted search, that is still basically SEO. Yup, good old-fashioned SEO the way you've been doing it all along. Create great quality content, get links to it, get it shared, get it talked about in as many places as you can. For example, you'll note in the example above that both Claude and ChatGPT hit up LinkedIn quite hard for data, so be everywhere and have your content talked about everywhere.

For category 3, AI replacements for search, do the exercises I recommended. Take some time to do the searches and questions and discussions in the major AI tools about your brand, your industry, your vertical, but instead of looking at the generated text, look at the sources.
Look at where AI tools are getting their information for search grounding, because that is your blueprint for where to invest your time and effort.

Part 5: Wrapping Up

There is a TON of snake oil being peddled in the AI optimization space. Everyone and their cousin has a hot take, and as actual SEO expert Lily Ray said recently, a lot of people have very strong opinions about AI optimization backed up by absolutely no data whatsoever.

That's why I spent so much time finding and aggregating the patents, research papers, and materials. I don't know - and you don't know either - what people are typing into systems like ChatGPT and Gemini. But we can absolutely know how the systems are architected overall and what we should be doing to show up in as many places as possible to be found by them.

Later this summer, look for a full guide from Trust Insights about this topic, because (a) it's too important to leave to the snake oil salesmen and (b) I need to get more mileage out of all this data I collected and processed.

As with my Unofficial LinkedIn Algorithm Guide for Marketers, it should be abundantly clear that there is no "AI algorithm" or any such nonsense. There are, instead, dozens of complex, interacting, sometimes conflicting systems at play that are processing data at scale for users. There's little to no chance of any kind of "hack" or "growth power move" or whatever other snake oil is being peddled about AI optimization. Instead, there's just hard work and being smart about where and how you create.

How Was This Issue?

Rate this week's newsletter issue with a single click/tap. Your feedback over time helps me figure out what content to create for you.

Here's The Unsubscribe

It took me a while to find a convenient way to link it up, but here's how to get to the unsubscribe. https://almosttimely.substack.com/action/disable_email

Share With a Friend or Colleague

If you enjoy this newsletter and want to share it with a friend/colleague, please do.
Send this URL to your friend/colleague: https://www.christopherspenn.com/newsletter

For enrolled subscribers on Substack, there are referral rewards if you refer 100, 200, or 300 other readers. Visit the Leaderboard here.

Advertisement: Bring Me In To Speak At Your Event

Elevate your next conference or corporate retreat with a customized keynote on the practical applications of AI. I deliver fresh insights tailored to your audience's industry and challenges, equipping your attendees with actionable resources and real-world knowledge to navigate the evolving AI landscape.

👉 If this sounds good to you, click/tap here to grab 15 minutes with the team to talk over your event's specific needs.

If you'd like to see more, here are:

ICYMI: In Case You Missed It

This week, John and I kicked off the Summer Makeover Series with podcast transcription automation.

Skill Up With Classes

These are just a few of the classes I have available over at the Trust Insights website that you can take.

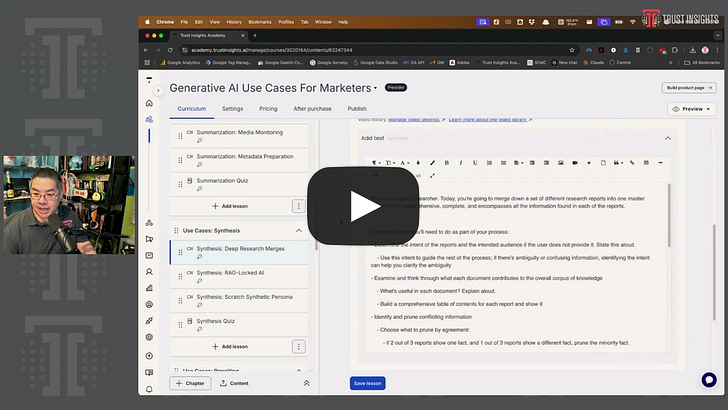

Advertisement: New AI Course!

In my new Generative AI Use Cases for Marketers course, you'll learn AI use cases in an entirely new way. The days of simply sitting back and staring at a bewildering collection of confusing use cases and success stories are over. They weren't all that helpful to begin with.

In this course, instead, you'll learn the 7 major categories of generative AI use cases with 3 examples each - and you'll do every single one of them. Each example comes with prompts, sample data, and walkthroughs so you can learn hands-on how to apply the different use cases.

You'll also learn how to put the use case categories together so you can identify your own use cases, set up effective AI strategy for your real world work, and make generative AI work for you.

Every course module comes with audio to go for when you want to listen, like at the gym or while cooking, plus transcripts, videos, closed captions, and data.

Sign up today by visiting trustinsights.ai/usecasescourse

👉 Pre-order my new course, Generative AI Use Cases for Marketers!

What's In The Box? Here's a 5 Minute Tour

Here's a 5 minute video tour of the course so you can see what's inside.

Get Back to Work

Folks who post jobs in the free Analytics for Marketers Slack community may have those jobs shared here, too. If you're looking for work, check out these recent open positions, and check out the Slack group for the comprehensive list.

Advertisement: Free AI Strategy Kit

Grab the Trust Insights AI-Ready Marketing Strategy Kit! It's the culmination of almost a decade of experience deploying AI (yes, classical AI pre-ChatGPT is still AI), and the lessons we've earned and learned along the way. In the kit, you'll find:

If you want to earn a black belt, the first step is mastering the basics as a white belt, and that's what this kit is. Get your house in order, master the basics of preparing for AI, and you'll be better positioned than 99% of the folks chasing buzzwords.

👉 Grab your kit for free at TrustInsights.ai/aikit today.

How to Stay in Touch

Let's make sure we're connected in the places it suits you best. Here's where you can find different content:

Listen to my theme song as a new single:

Advertisement: Ukraine 🇺🇦 Humanitarian Fund

The war to free Ukraine continues. If you'd like to support humanitarian efforts in Ukraine, the Ukrainian government has set up a special portal, United24, to help make contributing easy. The effort to free Ukraine from Russia's illegal invasion needs your ongoing support.

👉 Donate today to the Ukraine Humanitarian Relief Fund »

Events I'll Be At

Here are the public events where I'm speaking and attending. Say hi if you're at an event also:

There are also private events that aren't open to the public. If you're an event organizer, let me help your event shine. Visit my speaking page for more details.

Can't be at an event? Stop by my private Slack group instead, Analytics for Marketers.

Required Disclosures

Events with links have purchased sponsorships in this newsletter and as a result, I receive direct financial compensation for promoting them. Advertisements in this newsletter have paid to be promoted, and as a result, I receive direct financial compensation for promoting them.

My company, Trust Insights, maintains business partnerships with companies including, but not limited to, IBM, Cisco Systems, Amazon, Talkwalker, MarketingProfs, MarketMuse, Agorapulse, Hubspot, Informa, Demandbase, The Marketing AI Institute, and others. While links shared from partners are not explicit endorsements, nor do they directly financially benefit Trust Insights, a commercial relationship exists for which Trust Insights may receive indirect financial benefit, and thus I may receive indirect financial benefit from them as well.

Thank You

Thanks for subscribing and reading this far. I appreciate it. As always, thank you for your support, your attention, and your kindness.

See you next week,

Christopher S. Penn

Appendix: Long List of Citations
