Almost Timely News: 🗞️ How To Get Started with Hosted Open Weights AI (2026-02-22)

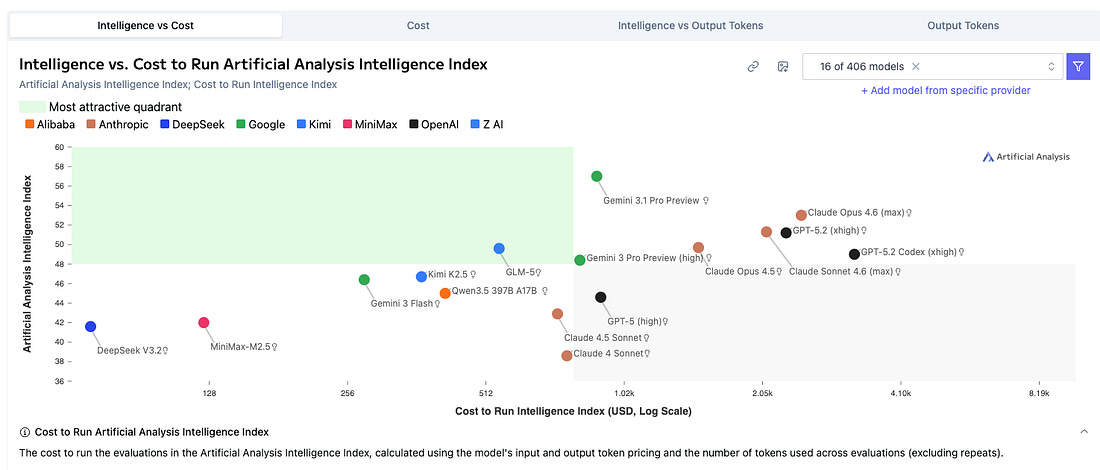

All the power of AI at 5% of the cost

The Big Plug

Two things to try out this week:

1. Got a stuck AI project? Try out Katie’s free AI Readiness Assessment tool. A simple quiz to help predict AI project success.
2. Wonder how your website is seen by AI? Try my free AI View tool (limited to 10 URLs per day). It looks at your site and tells you what an AI crawler likely sees - and what to fix.

Content Authenticity Statement

95% of this week’s newsletter content was originated by me, the human. You’ll see outputs from Claude Code in the opening segment. Learn why this kind of disclosure is a good idea and might be required for anyone doing business in any capacity with the EU in the near future.

Watch This Newsletter On YouTube 📺

Click here for the video 📺 version of this newsletter on YouTube »

Click here for an MP3 audio 🎧 only version »

What’s On My Mind: How To Get Started with Hosted Open Weights AI

This week, let’s talk about using open weights models from a hosted provider. There are many situations where you’d want to use something like a state of the art (SOTA) open weights model but you don’t have the hardware to run it yourself. I’ll show you how to get started, what it will cost (it’s not free), and how to start using them.

Part 1: Glossary

If that all sounded like word salad, then let’s get the table set with some definitions.

Open weights: in the world of AI, there are two fundamental types of AI models, closed weights and open weights. Closed weights models are kept secret by providers. You can’t download them or exert much control over them; these are models like OpenAI’s GPT-5.3, or Google’s Gemini 3.1. Open weights models are models that you can download and install on your computer or in a third party provider. The models themselves are usually free.

SOTA: state of the art.
Generally, this term refers to any AI model that tops benchmark charts.

Inference: when AI is generating stuff, it’s called inference. When it’s learning, that’s called training. For end users like you and me, we are almost always doing inference. This is important because we’re looking mainly for inference providers, which is the name of the type of company that hosts open weights AI models.

Prompt Caching: when we’re shopping for AI model hosting companies, look for companies that offer solid prompt caching. This saves the unchanging parts of a prompt from task to task, which can result in substantial cost savings.

Parameters: parameters are the statistical associations in a model that represent its knowledge. The more parameters a model has, generally speaking, the more knowledge it has. An 8 billion parameter model (which is relatively small) will have much less broad knowledge than an 8 trillion parameter model. The fewer parameters a model has, the more likely it is to hallucinate without access to tools.

Tools: in the context of AI, tools are anything that an AI model can use if it’s told they’re available. The most common tool is web search - when we perform a task that requires external knowledge, a model can fire up a web search to get current information. Other tools include things like the ability to talk to specific applications like your CRM or email inbox, etc.

Context window: AI models all have long and short term memory. Their long term memory is encoded in their parameters. Their short term working memory is called a context window, measured in tokens.

Tokens: the mathematical unit that AI operates in, typically about 3/4 of a word. When we talk about a model’s context window, it’s measured in tokens. The more tokens a model can hold in its context window, the more complex and detailed a task it can do. Models like Claude Opus 4.6 and Gemini 3.1 have 1 million token context windows, which means they can work with about 750,000 words at a time.
API: short for application programming interface, an API is how software packages talk to each other. Your AI interface connects to an inference provider via an API.

Zero Data Retention: A policy used by technology companies that states they do not keep information you send to them. Especially important for AI where your prompts and responses often contain valuable or sensitive information.

Part 2: Reasons for Open Weights Models

Let’s dig into the specific use cases. The most obvious question about open weights models is, why would you want to use an open weights model versus one of the premier SOTA models like Gemini 3.1 or Opus 4.6? If you already have ChatGPT, isn’t that good enough?

There are four major reasons to consider open weights models.

First is privacy - depending on the inference provider you work with, they may have policies like Zero Data Retention (ZDR). For data that is commercially sensitive (but still allowable with safe third parties), using an inference provider that offers ZDR will be more private than using a commercial provider like OpenAI or Google that may retain your data for 30 days or more - and if you’re using the free versions, your data is probably being used to train future models and is retained in perpetuity.

Almost every major SOTA big tech provider has some form of data retention, so if privacy is important to you (and you’re working with material that is still acceptable to be briefly on a third party’s infrastructure), then using open weights models via an inference provider might fit the bill. You still get near-SOTA capabilities but much more privacy.

Note that for truly sensitive, confidential data, even a ZDR inference provider is still technically a third party. Use local models hosted on your own infrastructure if you have truly confidential data that cannot ever be in the hands of a third party.

The second major reason is cost. Open weights models typically cost much less than their closed weights counterparts.
In this chart from Artificial Analysis, we see the typical consultant’s 2x2 matrix - intelligence versus cost. The model that’s just about right is GLM-5 from z.ai (the Chinese AI company Zhipu). Intelligence and cost are tradeoffs in AI - because AI providers bill by token amounts, sometimes a smarter model ends up being cheaper because it requires less thinking and chasing its own tail than a dumber, cheaper model that has to retry a task many times to get it right.

Here’s a sense of the costs. Prior to this month, Google’s Gemini 3.0, Claude Opus 4.5 and GLM-5 were comparable on intelligence. Here’s how their API costs compare:

(Pricing for GLM-5 is from DeepInfra, a US-based inference provider.)

GLM-5, comparable in intelligence to these two peers, costs in some cases 1/10th of what its competitors cost. Moonshot AI’s Kimi K2.5 model is half the cost of GLM-5, making it 1/20th the cost of Opus 4.5 for similar performance. And coders’ favorite Minimax-M2.5 is half the cost of that, at 27 cents per million tokens in, 95 cents per million tokens out.

One important clarification: when we’re talking about Chinese models like GLM-5, we are not talking about using them through the Chinese company’s infrastructure. We are talking about using them with an inference provider of our choosing. Because open weights models are models that anyone can download, a cottage industry of companies has set up shop to host these freely available models. So to be clear, we’re not using a model that is based in the People’s Republic of China. We’re using a model that came from China, but can be used anywhere.

The current generation of open weights models is, performance-wise, on par with the previous generation of SOTA models (which were current until the end of January 2026, so not that long ago). For 1/20th the cost of the market leader, you could get the same power.

Now, if you’re using AI in a chat capacity, that might not mean much, especially if you’re doing one-off tasks like writing a blog post. But if you’re using AI agents, and especially the latest breed of autonomous agents like OpenClaw and its derivatives? Using it with Anthropic’s API (which it was originally designed to do) could cost you hundreds of euros or dollars a month, maybe more. When you switch to an open weights model, you’re cutting your costs by up to 95% for the same level of intelligence. One of the reasons Minimax-M2.5 has become so popular in the last month is because it’s well-suited for agents like OpenClaw - fast, smart, and cheap.
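To make the pricing concrete, here is a minimal sketch of the arithmetic. It assumes the Minimax-M2.5 rates quoted above (27 cents per million tokens in, 95 cents per million tokens out) and the glossary's rule of thumb that a token is about 3/4 of a word. The workload figures and function names are hypothetical, made up purely for illustration; swap in your own provider's rates and your own usage.

```python
# Rough API cost estimator. Rates are in USD per million tokens.
WORDS_PER_TOKEN = 0.75  # rule of thumb: one token is about 3/4 of a word


def words_to_tokens(words: int) -> int:
    """Estimate a token count from a word count."""
    return round(words / WORDS_PER_TOKEN)


def estimate_cost(input_tokens: int, output_tokens: int,
                  usd_per_m_in: float, usd_per_m_out: float) -> float:
    """Return the estimated cost in USD for one workload."""
    cost_in = (input_tokens / 1_000_000) * usd_per_m_in
    cost_out = (output_tokens / 1_000_000) * usd_per_m_out
    return cost_in + cost_out


# Minimax-M2.5 rates as quoted in this issue.
MINIMAX_IN, MINIMAX_OUT = 0.27, 0.95

# HYPOTHETICAL month of agent usage: 15M words read, 1.5M words written.
tokens_in = words_to_tokens(15_000_000)
tokens_out = words_to_tokens(1_500_000)

monthly = estimate_cost(tokens_in, tokens_out, MINIMAX_IN, MINIMAX_OUT)
print(f"Tokens in: {tokens_in:,}  Tokens out: {tokens_out:,}")
print(f"Estimated monthly cost: ${monthly:.2f}")
```

Run the same function with the rates of whatever closed weights model you use today to see the gap for your own workload. Real bills will differ, since token counts vary by model and prompt caching can lower the input side.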
This is doubly important for anyone who is on limited resources. Claude Code, for example, is incredibly powerful, but you might not have USD 200 to spend monthly on it. What if you could get the same kind of power and performance on USD 10 a month? Using a model like Minimax-M2.5, if the model is suited for your use cases, would let you have the best of both worlds - high intelligence and low costs.

I think of my many friends and colleagues who are between jobs right now. Claude Code’s capabilities could make a huge difference for them, but Anthropic’s limits on the free and lowest cost subscriptions put those capabilities out of reach. And they might not have 200 bucks a month to buy the max subscription. Open weights models would let them have that same capability for 10 bucks a month.

The third reason is control. Many open weights models have less censorship or different censorship than the models and systems we’re used to. Systems like ChatGPT and Gemini are heavily censored, prohibiting you from making specific types of requests even if you have a legitimate use case. Open weights models vary in terms of level of censorship from model to model, but because an inference provider gives you access to the raw model itself, chances are you’ll have more flexibility. You have more control over how AI responds.

Whenever I bring this up in conversation, people look at me and wonder exactly what I’m asking AI. I’ll give you a practical use case for wanting to avoid censorship. There’s one client that works in the laboratory reagents space. Their product catalog is a who’s who of nearly every chemical under the sun, including some chemicals that are highly restricted. As a certified reagents provider, they are legally authorized to deal in those chemicals, but if they try to have ChatGPT or similar work on their product catalog, they’re often told they’re asking about restricted things and can’t proceed.
For this company to harness the power of AI, they need models and providers that allow them to work with the chemicals and reagents in their catalog, and many open weights models fit that bill.

The fourth use case is backups. Sometimes... things break. You show up at work one day and everyone else is panicking that ChatGPT is down or Gemini is down or something else. When you use a separate inference provider, depending on where that provider is located, you might be immune to the outage, allowing you to keep getting things done while everyone else wonders what to do besides stare at an error message.

The more likely scenario is running out of quota. Every provider today that offers tools like Claude Code, OpenAI Codex, Google Antigravity, etc. gives you a certain amount of API usage included with your subscription each week. That usage may or may not go very far depending on the tasks you’re working on. If you have an open weights inference provider that’s pay as you go, then as long as you can keep paying, you can keep going. There are no limits that you have to budget for.

Anyone who’s used Claude Cowork or Claude Code has run into that dreaded “You’ve used 97% of your quota” message at some point - usually when you’re in the middle of a critical change or project. Having an open weights provider on standby means you can use Claude Code with your regular plan and when you hit limits, use a tool like Claude Code Router to switch to your open weights provider and keep on going.

Part 3: Getting Started

Okay, so that sounds great - open weights models are powerful, cheap, private, capable, and have fewer restrictions. What was state of the art three weeks ago is now commodity today. But how do you actually use these things? How would someone get started?

First, you need an interface of some kind. No one ever uses an AI model directly; instead, there’s some kind of wrapper around it, which in nerdspeak is called a harness.
Think of the AI model as the engine; the harness is the car that the engine sits in. When we use models through inference providers, we are essentially given the ability to choose the engine AND what kind of car we want to put the engine in.

If you’re used to chatting with an environment like ChatGPT, you’ll want some kind of chat interface. As we talked about in a previous issue, you can use desktop apps like Anything LLM and connect them to any inference provider.

If you’re a coder, you’ll be able to use the model’s API right inside most coding tools like Cline, Kilo Code, Qwen Code, etc.

If you’re a highly technical person, you might want something like Claude Code Router that can let you keep all your settings and tools you’ve built in Claude Code, but change the engine it uses. (It also lets you use Claude Code without an Anthropic subscription, which is a nice plus.)

Second, you need an inference provider. Here’s a starting prompt to commission any Deep Research report on:

Run this in any Deep Research tool of your choice and examine the results. Double check the privacy policies! There is no substitute for human review when it comes to things like privacy and security, especially if you plan on working with sensitive data. A few providers that I’m familiar with in my region - and none of them have paid to be listed:

Once you’ve chosen your inference provider and set up your account with them - almost all of them require you to put some money down - then you’ll get your API keys to use with your environment. Store them somewhere secure.

Next, in the interface you’re using, look for how to input your API key and choose your model. Here’s an example; I’m using DeepInfra as my provider, so I have an API key for their service. If I want to use it with Anything LLM, I’d take a screenshot of my settings, the documentation for the app, and this starter prompt:

This will walk you through the setup based on your level of technical skill, helping you get up and running.

Part 4: Testing Models

Your next step is to develop a model test that will help you decide which models to use that best suit your purposes. What do you usually use AI for? For example, suppose you use AI to write LinkedIn posts. Take your top 5 best performing LinkedIn posts you’ve ever written and reverse engineer a prompt out of them.

Here’s an example of one tailored specifically for me and my writing style, derived from my own top posts in the last year. Claude reverse engineered this:

Once you’ve got your own version of this testbed prompt written, then take something you’d write about and put it in the raw thoughts section. Then in a tool like Anything LLM, run this prompt for every model you want to test out. For good measure, use the exact same prompt with your favorite closed weights model/tool (like ChatGPT) as well.

Compare the results. Which model did the best job, in your opinion? This is critically important - you have to be the judge of whether or not the model got the job done. Of the open weights models available to you in your inference provider, which got the closest to the way you actually do the task?

And every time a new model comes out, you have a benchmark test that is specifically suited to you that you can evaluate the model with. If you want to be sure, run multiple tests per model to see how it does, especially if you use AI for a variety of tasks.

If you’re a more advanced user? Set up a workflow in a system like Claude Code or n8n to programmatically test the different tasks you’d want to evaluate AI on. Maybe you’re a web developer - develop a complex prompt for an infographic or an interactive, and see which model gets closest to the way you’d do it. If you’re a coder, give it a task and your coding standards and see which model produces working code with as few revisions as possible.
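For the programmatic route, a testbed can be as small as a loop that sends the same prompt to each model and prints the replies side by side. The sketch below is one way to do that with only the Python standard library. It assumes your inference provider exposes an OpenAI-style chat completions endpoint (many do, but check your provider's documentation). The URL, the model IDs, and the `INFERENCE_API_KEY` variable name are all placeholders I made up, not real values.

```python
import json
import os
import urllib.request

# ASSUMPTION: an OpenAI-style chat endpoint. Placeholder URL; replace it with
# the endpoint listed in your provider's documentation.
BASE_URL = "https://api.example-provider.com/v1/chat/completions"

# ASSUMPTION: placeholder model IDs; use the exact names your provider lists.
MODELS = ["example/glm-5", "example/kimi-k2.5", "example/minimax-m2.5"]


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) one chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        BASE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_testbed(prompt: str, api_key: str) -> dict[str, str]:
    """Send the same prompt to every model and collect the replies."""
    results = {}
    for model in MODELS:
        request = build_request(model, prompt, api_key)
        with urllib.request.urlopen(request, timeout=120) as response:
            body = json.load(response)
        # OpenAI-style responses carry the reply text at this path.
        results[model] = body["choices"][0]["message"]["content"]
    return results


if __name__ == "__main__":
    # Hypothetical variable name; the point is to keep the key out of the code.
    key = os.environ.get("INFERENCE_API_KEY")
    if key:
        for model, reply in run_testbed("Your testbed prompt here", key).items():
            print(f"=== {model} ===\n{reply}\n")
    else:
        print("Set INFERENCE_API_KEY to run the comparison.")
```

The script only gathers the outputs. Judging which reply is closest to how you would do the task is still your job.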
Whatever the case is, having some way to validate the performance of an AI model is absolutely mandatory if you want to know whether any given model will do what you want.

Part 5: Get to Work

Now that you’ve got an inference provider chosen, you’ve got a local interface chosen, and you’ve tested the models, you’re ready to get to work. You’re ready to start using open weights models at a safe, private inference provider for anything you’d normally use a closed weights model provider for.

That means you have access to private, secure AI, AI that is on standby (especially with pay as you go providers) for when your normal tool of choice breaks or is unavailable. Anyone who’s been using AI for more than a minute knows that whenever the big tech companies are about to release or do release a new piece of technology, they get overwhelmed by demand and their services are almost unusable for a couple of days while they scramble to meet demand.

During periods like that, you’re ready to go, and everyone else at the office will be wondering how you remain productive while they stand around the coffee machine.

I’ll share my own story here. I love to use AI to build and make stuff, and Trust Insights fortunately is a company that invests very heavily in both technology and its people. However, there are a ton of little projects on the side I have that have zero business value, like this one video game idea I had that’s just absurd. No business value at all.

Side projects like this almost never have business value, and thus when I want to work on them, I’ll switch to DeepInfra and use a highly capable open weights model instead of using company resources. Why? Because we subscribe to Claude’s Max plan, we get a generous amount of usage each week, but we share it as a team. Every token I use for a silly personal project is a token not available for the team to use that week, so to be responsible, I use open weights models for those things instead.
(See my January 25th issue about locally hosted open weights models.)

The same is true for token-intensive tasks that don’t always require the smartest, most expensive models to get them done, like building my daily briefing. Using an expensive, powerful model like Opus 4.6 to assemble a daily briefing from my to-do list and calendar is like taking a fighter jet to the grocery store. Yes, it can do the task very capably, but it’s vast overkill. Using an open weights model gets the job done just as well but doesn’t eat into our weekly token budget for Claude.

Another time, last summer, I was working on a major client project when Anthropic went down. It was a big outage, lasting for a good chunk of the workday, but I didn’t miss a step. I switched to an open weights model and was able to keep working while every other Claude user was unable to get anything done.

Open weights models should be part of the toolkit for every AI practitioner. You should have options for every major task of economic value you perform, and know - based on the testing you do like the testing in part 4 - which models are best at your specific tasks. Get started today - you might be surprised at how much you can do on a shoestring budget!

How Was This Issue?

Rate this week’s newsletter issue with a single click/tap. Your feedback over time helps me figure out what content to create for you.

Here’s The Unsubscribe

It took me a while to find a convenient way to link it up, but here’s how to get to the unsubscribe. If you don’t see anything, here’s the text link to copy and paste: https://almosttimely.substack.com/action/disable_email

Share With a Friend or Colleague

Please share this newsletter with two other people. Send this URL to your friends/colleagues: https://www.christopherspenn.com/newsletter

For enrolled subscribers on Substack, there are referral rewards if you refer 100, 200, or 300 other readers. Visit the Leaderboard here.
ICYMI: In Case You Missed It

Here’s content from the last week in case things fell through the cracks:

On The Tubes

Here’s what debuted on my YouTube channel this week:

Skill Up With Classes

These are just a few of the classes I have available over at the Trust Insights website that you can take.

Advertisement: New AI Book!

In Almost Timeless, generative AI expert Christopher Penn provides the definitive playbook. Drawing on 18 months of in-the-trenches work and insights from thousands of real-world questions, Penn distills the noise into 48 foundational principles - durable mental models that give you a more permanent, strategic understanding of this transformative technology. In this book, you will learn to:

Stop feeling overwhelmed. Start leading with confidence. By the time you finish Almost Timeless, you won’t just know what to do; you will understand why you are doing it. And in an age of constant change, that understanding is the only real competitive advantage.

👉 Order your copy of Almost Timeless: 48 Foundation Principles of Generative AI today!

Get Back To Work!

Folks who post jobs in the free Analytics for Marketers Slack community may have those jobs shared here, too. If you’re looking for work, check out these recent open positions, and check out the Slack group for the comprehensive list.

Advertisement: New AI Strategy Course

Almost every AI course is the same, conceptually. They show you how to prompt, how to set things up - the cooking equivalents of how to use a blender or how to cook a dish. These are foundation skills, and while they’re good and important, you know what’s missing from all of them? How to run a restaurant successfully. That’s the big miss. We’re so focused on the how that we completely lose sight of the why and the what.

This is why our new course, the AI-Ready Strategist, is different. It’s not a collection of prompting techniques or a set of recipes; it’s about why we do things with AI. AI strategy has nothing to do with prompting or the shiny object of the day - it has everything to do with extracting value from AI and avoiding preventable disasters. This course is for everyone in a decision-making capacity because it answers the questions almost every AI hype artist ignores: Why are you even considering AI in the first place? What will you do with it? If your AI strategy is the equivalent of obsessing over blenders while your steakhouse goes out of business, this is the course to get you back on course.

How to Stay in Touch

Let’s make sure we’re connected in the places it suits you best. Here’s where you can find different content:

Listen to my theme song as a new single:

Advertisement: Ukraine 🇺🇦 Humanitarian Fund

The war to free Ukraine continues. If you’d like to support humanitarian efforts in Ukraine, the Ukrainian government has set up a special portal, United24, to help make contributing easy. The effort to free Ukraine from Russia’s illegal invasion needs your ongoing support.

👉 Donate today to the Ukraine Humanitarian Relief Fund »

Events I’ll Be At

Here are the public events where I’m speaking and attending. Say hi if you’re at an event also:

There are also private events that aren’t open to the public. If you’re an event organizer, let me help your event shine. Visit my speaking page for more details.

Can’t be at an event? Stop by my private Slack group instead, Analytics for Marketers.

Required Disclosures

Events with links have purchased sponsorships in this newsletter and as a result, I receive direct financial compensation for promoting them. Advertisements in this newsletter have paid to be promoted, and as a result, I receive direct financial compensation for promoting them.

My company, Trust Insights, maintains business partnerships with companies including, but not limited to, IBM, Cisco Systems, Amazon, Talkwalker, MarketingProfs, MarketMuse, Agorapulse, Hubspot, Informa, Demandbase, The Marketing AI Institute, and others. While links shared from partners are not explicit endorsements, nor do they directly financially benefit Trust Insights, a commercial relationship exists for which Trust Insights may receive indirect financial benefit, and thus I may receive indirect financial benefit from them as well.

Thank You

Thanks for subscribing and reading this far. I appreciate it. As always, thank you for your support, your attention, and your kindness. Please share this newsletter with two other people.

See you next week,

Christopher S. Penn
